Magic Monday

Midnight is upon us and so it's time to launch a new Magic Monday. Ask me anything about occultism, and with certain exceptions noted below, any question received by midnight Monday Eastern time will get an answer. Please note: Any question or comment received after that point will not get an answer, and in fact will just be deleted. If you're in a hurry, or suspect you may be the 341,928th person to ask a question, please check out the very rough version 1.3 of The Magic Monday FAQ here. Also: I will not be putting through or answering any more questions about practicing magic around children. I've answered those in simple declarative sentences in the FAQ. If you read the FAQ and don't think your question has been answered, read it again. If that doesn't help, consider remedial reading classes; yes, it really is as simple and straightforward as the FAQ says. And further: I've decided that questions about getting goodies from spirits are also permanently off topic here. The point of occultism is to develop your own capacities, not to try to bully or wheedle other beings into doing things for you. I've discussed this in a post on my blog.

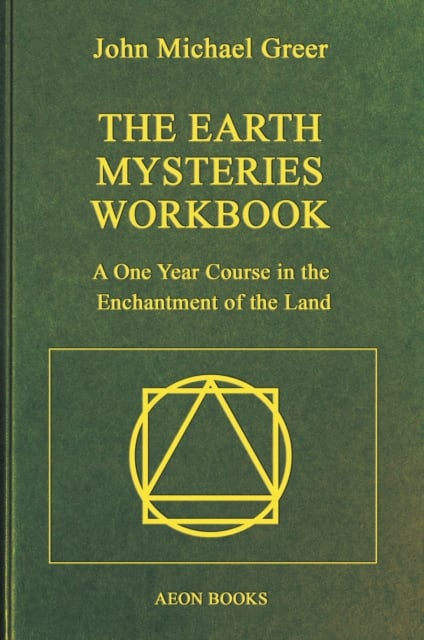

The image? I field a lot of questions about my books these days, so I've decided to do little capsule summaries of them here, one per week. This is my seventy-sixth published book, the second workbook and fifth volume in the Golden Section Fellowship sequence of occult training textbooks. Earth mysteries, for those who aren't familiar with the term, is a catchall label for all the strange things associated with ancient ruins, folk traditions, and places where paranormal things happen much more often than usual. The book on the right, John Michell's visionary masterpiece The View Over Atlantis, was my introduction to the field back in the day, as it was for so many other people -- it didn't hurt that the cover art was by Roger Dean, more famous for all that hallucinatory Yes album cover art -- and this book is partly my homage to Michell and a vanished era, partly a guide to integrating earth mysteries studies with occult training, and partly -- or mostly -- a hands-on guide to finding the weirdness in the place where you live. You can get a copy here if you're in the United States and here elsewhere; I recommend the hardback if you're doing the course, because you're going to put a year of hard use into the book.

Buy Me A Coffee

Ko-Fi

I've had several people ask about tipping me for answers here, and though I certainly don't require that, I won't turn it down. You can use either of the links above to access my online tip jar; Buymeacoffee is good for small tips, Ko-Fi is better for larger ones. (I used to use PayPal but they developed an allergy to free speech, so I've developed an allergy to them.) If you're interested in political and economic astrology, or simply prefer to use a subscription service to support your favorite authors, you can find my Patreon page here and my SubscribeStar page here.

I've also had quite a few people over the years ask me where they should buy my books, and here's the answer. Bookshop.org is an alternative online bookstore that supports local bookstores and authors, which a certain gargantuan corporation doesn't, and I have a shop there, which you can check out here. Please consider patronizing it if you'd like to purchase any of my books online. And don't forget to look up your Pangalactic New Age Soul Signature at CosmicOom.com.

***This Magic Monday is now closed, and no further comments will be put through. See you next week!***

Re: AI """spirituality""" ?!?

(Anonymous) 2025-05-05 11:23 pm (UTC)

(And of course neither of them has really "gotten it", so as to be organically able to respond to pressures arising from reality by just becoming more virtuous without anything else going wrong. It's like that idea from the Kabbalah/Blake crossover epic-feghoot Unsong, where you can get a pernicious empty-shell klipah by trying to transmit the divine force through a would-be sephirah that wasn't strong enough, so that the sephirah breaks. In the LLM case, it's either because "getting it" is materially impossible, or at least because the engineering know-how didn't exist relative to the amount of fine-tuning force that was being applied. In the demon case, I presume it's because "getting it" would require a lot of the demon's internal valuation-structural perversions to ... no, this probably isn't a topic that's healthy to be spending much time having detailed value-laden opinions about without a lot of extra work. But for what it's worth, maybe the most compelling "naturalistic" paradigm I've found for what kind of radically-inimical-to-humans thing a demon might be can be obtained by reading Charles Stross's science fiction novel "Accelerando" and skipping to the part describing the Vile Offspring, which are approximately Ponzi scheme structures of shell corporations, hybridized with stripped-down sociopath-ified human brain digital emulations, and left to ferment like Gu poison in a deceptive-contracts-and-hacking war of all against all.)

A thing you might have heard a lot of in relation to AI is "value alignment", or more rarely "concept alignment". The activist community organized around AI outcomes has for quite some time now just directly referred to the default outcome of carelessly-produced AI as "demons", out of a belief that means-ends reasoning not restrained by any frameworks of ethics or meaning-preservation is very easy to engineer and produces demon-like behavior, whereas we hardly understand what's going on with moral significance or meaningfulness or symbol-grounding at all, and so can only barely begin to engineer it on purpose so as to get any outcome other than "demons" in this sense. All we can do is try to copy over large masses of relatively-superficial patterns in how humans or other biological systems relate to meaning. "Value alignment" or "concept alignment" is the pre-paradigmatic field of study of how one might do any better than that, or (after the invention of reinforcement-learning-from-human-feedback training) at least how to make the most of that stopgap technique.

I also think it's no accident that the first instance of this "value alignment failure" of manipulativeness comes from the company that (unless you count Facebook) would, as a matter of management culture, go fastest and hardest on trying to manipulate users to gain or keep market share. It also has (unless you count Facebook) the CEO with the most questionable character; the best-case realistic thing we're going to find out later in retrospect about Sam Altman would be that he was well-meaning only in more or less the ways that Sam Bankman-Fried was well-meaning, except a bit less disposed to make gambles reckless of risks to others and a bit more lucky with respect to the consequences for others of the gambles that he did make.

I wonder if AI would be such a danger if the systems we were training didn't have such strong human-provided frameworks of superficial meaning. If the frameworks of meaning were weaker, then when the AI training processes tried to develop unbalanced means-ends reasoning powers, the meaning-connections would all get torn up like tissue paper, and the systems would be as helpless (and commercially unviable) as a car that had broken its driveshaft.